AI-powered robot warehouse pickers are now ready to go to work

Covariant, a Berkeley-based startup, has come out of stealth and thinks its robots are ready for the big time.

In the summer of 2018, a small Berkeley-based robotics startup received a challenge. Knapp, a major provider of warehouse logistics technologies, was on the hunt for a new AI-powered robotic arm that could pick as many types of items as possible. So every week, for eight weeks, it would send the startup a list of increasingly difficult items—opaque boxes, transparent boxes, pill packages, socks—that covered a range of products from its customers. The startup team would buy the items locally and then, within the week, send back a video of their robotic arm transferring the items from one gray bin to another.

By the end of the challenge, executives at Knapp were floored. They had challenged many startups over six or seven years with no success and expected the same outcome this time. Instead, in every video, the startup’s robotic arm transferred every item with perfect accuracy and production-ready speed.

“Every time, we expected that they would fail with the next product, because it became more and more tricky,” says Peter Puchwein, vice president of innovation at Knapp, which is headquartered in Austria. “But the point was they succeeded, and everything really worked. We've never seen this quality of AI before.”

That startup, Covariant, has now come out of stealth mode and is announcing its work with Knapp today. Its algorithms have already been deployed on Knapp’s robots in two of Knapp’s customers’ warehouses. One, operated by the German electrical supplier Obeta, has been fully in production since September. The cofounders say Covariant is also close to striking another deal with an industrial robotics giant.

The news signifies a change in the state of AI-driven robotics. Such systems used to be limited to highly constrained academic environments. But now Covariant says its system can generalize to the complexity of the real world and is ready to take warehouse floors by storm.

There are two categories of tasks in warehouses: things that require legs, like moving boxes from the front to the back of the space, and things that require hands, like picking items up and placing them in the right place. Robots have been in warehouses for a long time, but their success has primarily been limited to automating the former type of work. “If you look at a modern warehouse, people actually rarely move,” says Peter Chen, cofounder and CEO of Covariant. “Moving stuff between the fixed points—that’s a problem that mechatronics is really great for.”

But automating the motions of hands requires more than just the right hardware. The technology must nimbly adapt to a wide variety of product shapes and sizes in ever-changing orientations. A traditional robotic arm can be programmed to execute the same precise movements again and again, but it will fail the moment it encounters any deviation. It needs AI to “see” and adjust, or it will have no hope of keeping up with its evolving surroundings. “It’s really the dexterity part that requires intelligence,” Chen says.

In the last few years, research labs have made incredible advances in combining AI and robotics to achieve such dexterity, but bringing those advances into the real world has been a completely different story. Labs can get away with 60% or 70% accuracy; robots in production cannot. Even with 90% reliability, a robotic arm would be a “value-losing proposition,” says Pieter Abbeel, Covariant’s cofounder and chief scientist.

To truly pay back the investment, Abbeel and Chen estimate, a robot needs to be at least 99%—and maybe even 99.5%—accurate. Only then can it operate without much human intervention or risk slowing down a production line. But it wasn’t until the recent progress in deep learning, and in particular reinforcement learning, that this level of accuracy became possible.
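To see why a few percentage points matter so much, it helps to count failures rather than successes. The sketch below is a back-of-the-envelope calculation, not a figure from Covariant or Knapp: the throughput of 600 picks per hour is a hypothetical number chosen only to make the arithmetic concrete, and `failed_picks_per_hour` is an illustrative helper.

```python
def failed_picks_per_hour(accuracy: float, picks_per_hour: int) -> float:
    """Expected number of picks per hour that fail and need a human to step in."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return (1.0 - accuracy) * picks_per_hour


# Hypothetical throughput (an assumption, not a number from the article).
PICKS_PER_HOUR = 600

# Accuracy levels mentioned in the article: lab-grade, 90%, and the 99%+ target.
for accuracy in (0.70, 0.90, 0.99, 0.995):
    failures = failed_picks_per_hour(accuracy, PICKS_PER_HOUR)
    print(f"{accuracy:.1%} accurate: about {failures:.0f} failed picks per hour")
```

Under that assumed throughput, a 90% accurate arm would need help roughly once a minute, while a 99.5% accurate arm would need it only a few times an hour.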

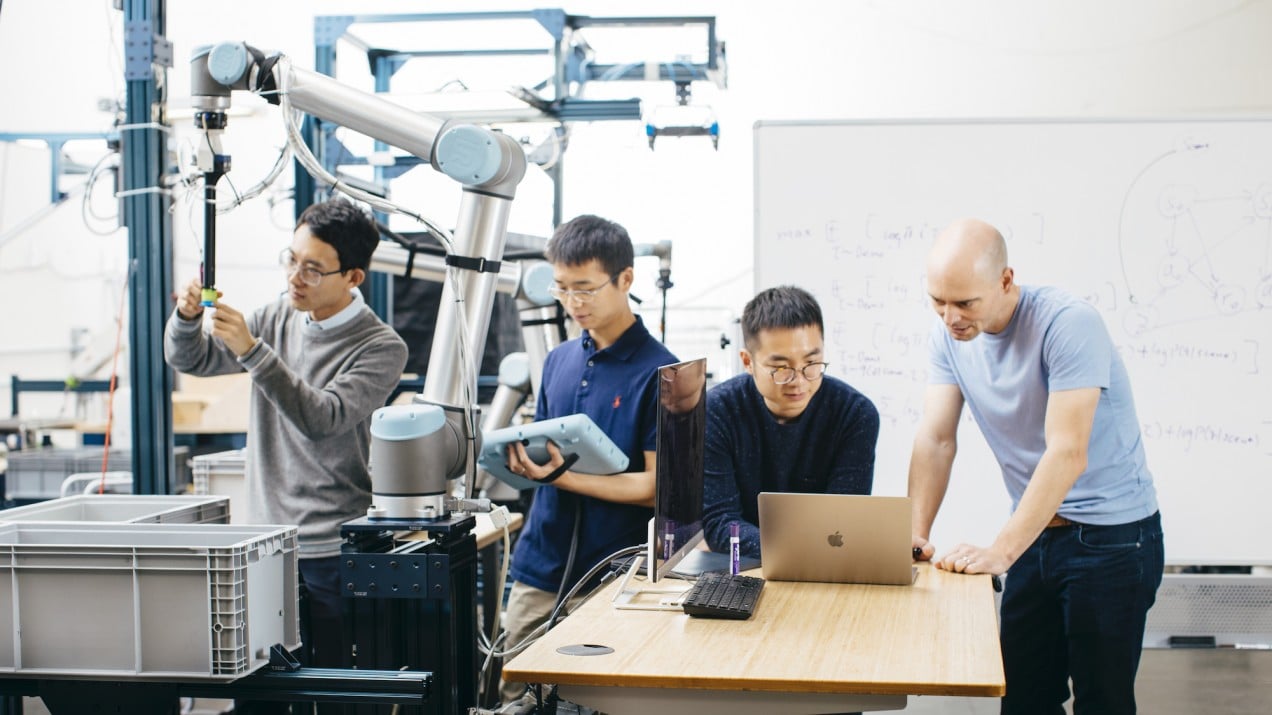

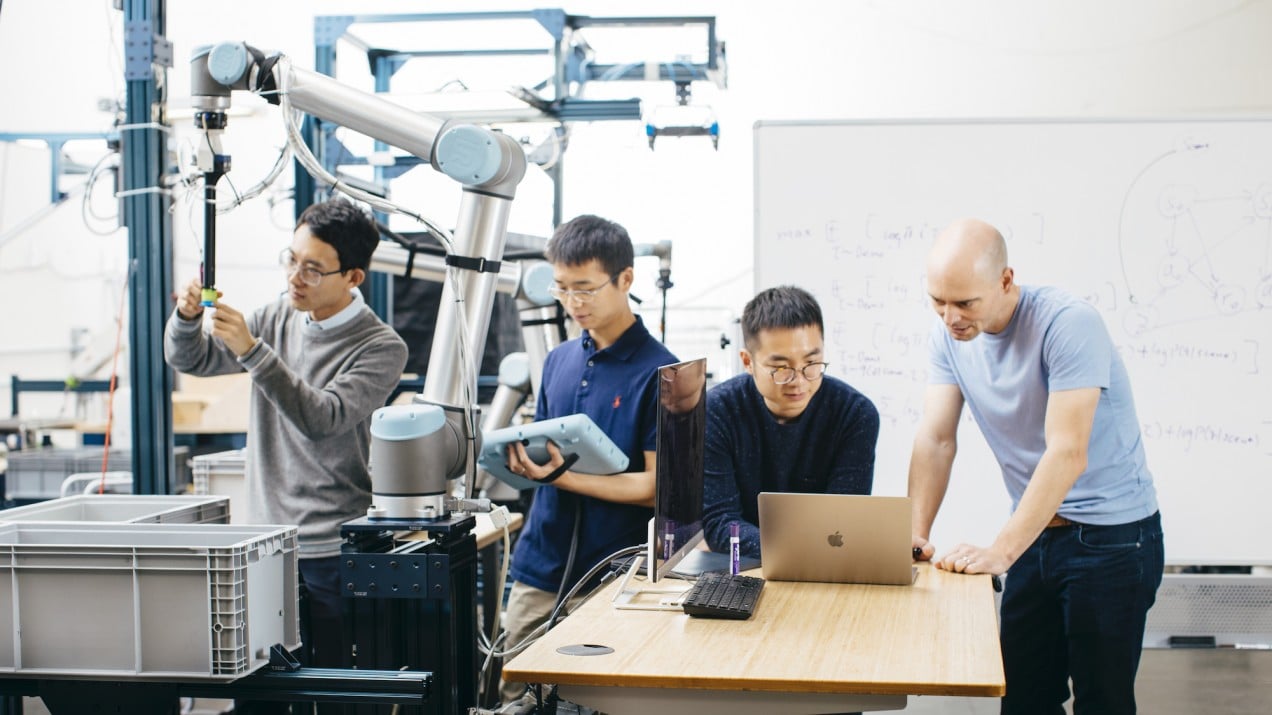

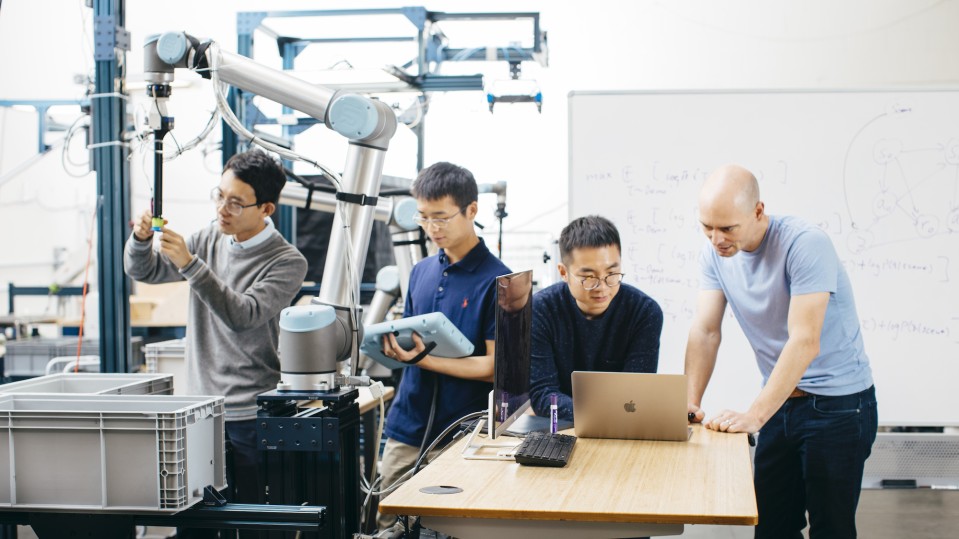

Covariant’s office is situated not far from the San Francisco Bay waterfront, off a dilapidated parking lot between a row of unmarked buildings. Inside, several industrial robots and “co-bots,” collaborative robots designed to operate safely around humans, train for every product possibility.

On a regular basis, members of Covariant’s team go on convenience store runs to buy whatever odds and ends they can find. The items range from bottled lotions to packaged clothes to eraser caps encased in clear boxes. The team especially looks for things that might trip the robot up: highly reflective metallic surfaces, transparent plastics, and easily deformable surfaces like cloth and chip bags that will look different to a camera every time.

Hanging above every robot is a series of cameras that act as its set of eyes. That visual data, along with sensor data from the robot’s body, feeds into the algorithm that controls its movements. The robots learn primarily through a combination of imitation and reinforcement techniques. The former involves a person manually guiding the robot to pick up different objects; the robot then logs and analyzes the motion sequences to understand how to generalize the behavior. The latter involves the robot conducting millions of rounds of trial and error. Every time the robot reaches for an item, it tries a slightly different approach. It then logs which attempts result in faster and more precise picks and which end in failure, so it can continually improve its performance.
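The trial-and-error half of that recipe can be illustrated with a toy example. The sketch below is not Covariant’s algorithm, whose details the company has not published here; it is a simple epsilon-greedy loop over three hypothetical grasp strategies with made-up success rates, and names such as `run_trials` are illustrative. It shows only the basic idea: try variations, log which ones succeed, and favor the ones that work.

```python
import random


def run_trials(success_rates, n_trials=5000, epsilon=0.1, seed=0):
    """Simulate trial-and-error picking over several grasp strategies.

    success_rates: hypothetical true success probability of each strategy,
    unknown to the learner and used only to simulate outcomes.
    Returns (attempts, successes) logged per strategy.
    """
    rng = random.Random(seed)
    attempts = [0] * len(success_rates)
    successes = [0] * len(success_rates)

    def estimate(i):
        # Observed success rate so far; 0.0 for strategies not yet tried.
        return successes[i] / attempts[i] if attempts[i] else 0.0

    for _ in range(n_trials):
        if rng.random() < epsilon:
            # Explore: occasionally try a random strategy.
            choice = rng.randrange(len(success_rates))
        else:
            # Exploit: use the strategy with the best logged success rate.
            choice = max(range(len(success_rates)), key=estimate)
        attempts[choice] += 1
        if rng.random() < success_rates[choice]:
            successes[choice] += 1
    return attempts, successes


# Made-up success rates for three imaginary grasp strategies.
attempts, successes = run_trials([0.2, 0.5, 0.9])
for i, (a, s) in enumerate(zip(attempts, successes)):
    print(f"strategy {i}: {a} attempts, {s} successful picks")
```

A real system would learn from camera and sensor data rather than from a fixed menu of strategies, but the feedback loop of attempting, logging, and adjusting is the same in spirit.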

Because it is ultimately the algorithm that learns, Covariant’s software platform, called Covariant Brain, is hardware agnostic. Indeed, the office has over a dozen robots of various models, and its live deployment with Obeta uses Knapp’s hardware.

Over the course of an hour, I watched three different robots masterfully pick up all manner of store-bought items. In seconds, the algorithm analyzes their positions, calculates the attack angle and correct sequence of motions, and extends the arm to grab on with a suction cup. It moves with certainty and precision, and changes its speed depending on the delicateness of the item. Pills wrapped in foil, for example, receive gentler treatment to avoid deforming the packaging or crushing the medication. In one particularly impressive demonstration, the robot also reversed its air flow to blow a pesky bag pressed against a bin’s wall into the center for easier access.

Knapp’s Puchwein says that since the company adopted Covariant’s platform, its robots have gone from being able to pick between 10% and 15% of Obeta’s product range to around 95%. The last 5% consists of particularly fragile items like glasses, which are still reserved for careful handling by humans. “That’s not a problem,” Puchwein adds. “In the future, a typical setup should be maybe you have 10 robots and one manual picking station. That’s exactly the plan.” Through the collaboration, Knapp will distribute its Covariant-enabled robots to all of its customers’ warehouses in the next few years.

While technically impressive, the statistics also raise questions about the impact such robots will have on job automation. Puchwein admits that he anticipates hundreds or thousands of robots taking over tasks traditionally done by humans within the next five years. But, he claims, people don’t want to do the work anymore anyway. In Europe, especially, companies often struggle to find enough employees to staff their warehouses. “That’s exactly the feedback of all our customers,” he says. “They don’t find people, so they need more automation.”

Covariant has raised $27 million to date, with funders including AI luminaries like Turing Award winners Geoffrey Hinton and Yann LeCun. In addition to product picking, it wants to eventually encompass all aspects of warehouse fulfillment, from unloading trucks to packing boxes to sorting shelves. It also envisions expanding beyond warehouses into other areas and industries.

But ultimately, Abbeel has an even loftier goal: “The long-term vision of the company is to solve all of AI robotics.”