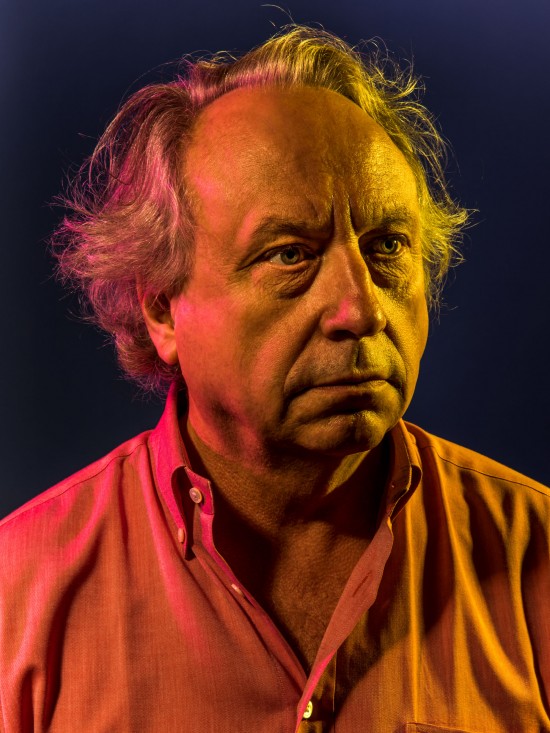

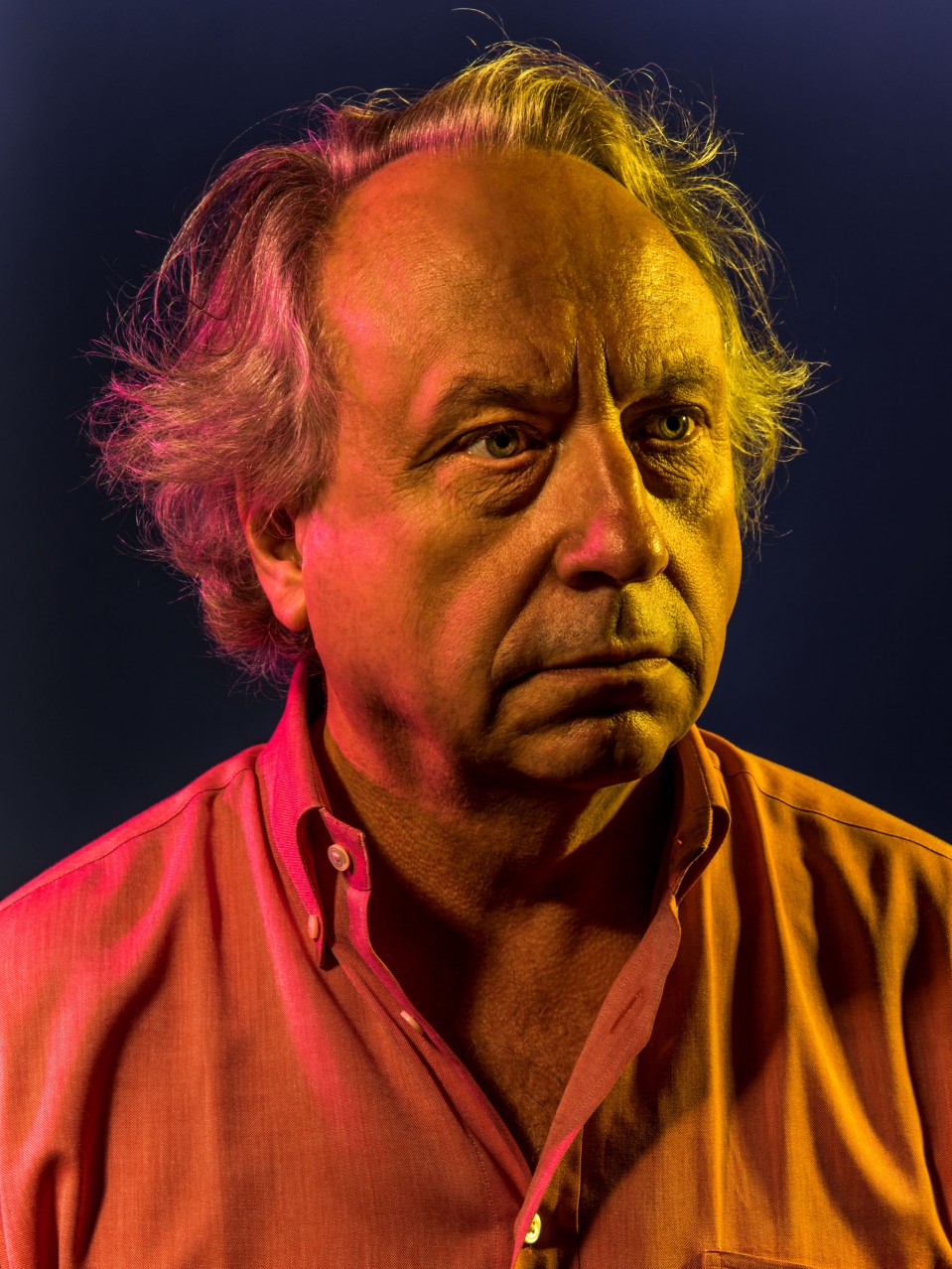

Rodney Brooks

The professor who got robots zipping through the world—and cleaning house—by challenging conventional wisdom in AI.

Rodney Brooks was hot, bored, and isolated at his in-laws’ home in Thailand when he had an inspiration that would redirect the field of robotics and lead to Roomba vacuums in millions of homes.

It was December 1984. Brooks was turning 30, and as a new member of the MIT faculty, he was trying to get robots to move about in the world. If they could, they might grant wishes from science fiction: venture into dangerous places, explore space, clean our houses.

But while stationary robot arms had been performing repetitive tasks in factories since the 1960s, mobile robots barely existed. An early example was Shakey, an ungainly computer on wheels developed by researchers at SRI International in the late 1960s and early ’70s. To navigate rooms filled with large blocks, Shakey needed so much computational power it had a wireless link to a mainframe.

Artificial-intelligence researchers tried to streamline Shakey’s general setup with algorithms that could elegantly crystallize a machine’s master-planning abilities. Progress was slow—literally. In the late 1970s, Stanford’s Hans Moravec developed a cart that would roll for a while before stopping, taking pictures, and plotting its next moves. It could avoid obstacles in a room, but it traveled a meter every 15 minutes.

Brooks was pursuing roughly similar approaches in 1984. In one project, he was figuring out how robots could mathematically account for imprecision in their movements when they updated maps of their surroundings. Before going to Thailand for a month with his then-wife and their baby son, he produced “the most boring paper in the world,” he recalls. “Full of math equations.”

Brooks, who grew up in Australia, didn’t speak Thai. His wife’s family didn’t speak English. “And when my wife was with her family, she didn’t speak English either,” he says. “So I just got to sit there. I had a lot of time to think.” Daydreaming in the tropical heat, “I’m watching these insects buzz around. And they’ve got tiny, tiny little brains, some as small as 100,000 neurons, and I’m thinking, ‘They can’t do the mathematics I’m asking my robots to do for an even simpler thing. They’re hunting. They’re eating. They’re foraging. They’re mating. They’re getting out of my way when I’m trying to slap them. How are they doing all this stuff? They must be organized differently.’

“That was where things started. That was the a-ha!”

Bugs don’t assess every situation, consider various options, and then plan each movement. Instead, their brains are driven by feedback loops honed over hundreds of millions of years. Tidbits of sensory information provoke them to react in specific ways; combinations of these reactions add up to quick, assured behaviors. So when Brooks got back to Cambridge, he stopped trying to program robots with complicated mathematics and started writing software with simple rules.

The first machine he built this way, which he named Allen in honor of AI researcher Allen Newell, looked like an inverted trash can on wheels. It had sonar to sense objects, and Brooks gave it a basic instruction: don’t hit stuff. Allen would sit there until someone walked up to it; then it moved away. Next, Brooks added a second feedback loop. He told the machine to wander. Now with just some sensors and two main goals, it could weave its way through a crowded room and keep up with a slow-walking human.

Brooks added only one more layer of feedback to make Allen’s behavior substantially more complex. He told Allen to detect distant places and head toward them. This third rule could suppress the instinct to merely wander unless the first rule—Avoid obstacles!—came into effect. In that case, the robot should revert to just getting out of the way before continuing to the distant spot.
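The layering described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Brooks's actual code: the names `Reading`, `avoid`, `seek_distant`, `wander`, and `arbitrate`, the 0.5-meter threshold, and the command strings are all assumptions made up for the example, and a fixed priority order stands in for the way Allen's layers adjusted one another.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    """One snapshot of (hypothetical) sensor data."""
    nearest_obstacle_m: float                # closest sonar return, in meters
    distant_goal_bearing: Optional[float]    # bearing to a far-off spot, or None


SAFE_DISTANCE_M = 0.5  # assumed threshold, not a figure from the article


def avoid(reading: Reading) -> Optional[str]:
    """Rule 1: don't hit stuff. Speaks up only when something is too close."""
    if reading.nearest_obstacle_m < SAFE_DISTANCE_M:
        return "back_away"
    return None


def seek_distant(reading: Reading) -> Optional[str]:
    """Rule 3: head toward a distant place, if one has been detected."""
    if reading.distant_goal_bearing is not None:
        return f"head_to:{reading.distant_goal_bearing:.0f}"
    return None


def wander(reading: Reading) -> Optional[str]:
    """Rule 2: with nothing else to do, roam."""
    return "wander"


def arbitrate(reading: Reading) -> str:
    """Pick one command: avoidance wins, then seeking suppresses wandering."""
    for layer in (avoid, seek_distant, wander):
        command = layer(reading)
        if command is not None:
            return command
    return "stop"  # not reached while wander always answers; a safe default


if __name__ == "__main__":
    print(arbitrate(Reading(nearest_obstacle_m=0.2, distant_goal_bearing=90.0)))
    print(arbitrate(Reading(nearest_obstacle_m=2.0, distant_goal_bearing=90.0)))
    print(arbitrate(Reading(nearest_obstacle_m=2.0, distant_goal_bearing=None)))
```

Nothing in the sketch builds a map or plans a route. Each rule looks only at the latest sensor reading, and the robot's apparent purposefulness comes from which rule happens to win at a given moment.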

Allen did all this without first deciding to do it, because each set of sensors generated sufficient feedback to adjust what the other two layers were doing. That bothered some of Brooks’s elders in AI, who had spent decades laboring on symbolic representations of thought and action for computers to process. Two prominent researchers later told Brooks that when he explained Allen at a conference, one whispered to the other, “Why is this young man throwing his career away?”

Undeterred, Brooks replicated Allen’s behaviors in toy cars named Tom and Jerry. He made Herbert, which could detect and grab soda cans. Genghis, a one-kilogram bot with six legs, could scurry over uneven terrain.

In a 1990 paper, “Elephants Don’t Play Chess,” Brooks argued that his robots revealed the shortcomings of classical AI approaches that fed complicated models of the world to disembodied electronic brains. Why not just have machines explore the world? “The world is its own best model,” Brooks wrote. “It is always exactly up to date. It always contains every detail there is to be known. The trick is to sense it appropriately and often enough.”

Classical AI researchers would point out things his simple robots could not do. But, Brooks responded, with more complex feedback, machines like his could carry out more sophisticated tasks. “Likewise it is unfair to claim that an elephant has no intelligence worth studying just because it does not play chess,” he wrote.

Brooks proved the point at iRobot, a company he founded in 1990 with two of his students, Helen Greiner ’89, SM ’90, and Colin Angle ’89, SM ’91. iRobot developed mobile robots for the US military—bots that find and destroy land mines, search through rubble, or carry gear for soldiers—and released the Roomba vacuum in 2002. Later came models that can clear your gutters, wash your floor, or scrub your pool. The company has sold 25 million robots.

Brooks was less successful with Rethink Robotics, a company he cofounded in 2008. Rethink created robots named Baxter and Sawyer that could work alongside humans in factories and packaging facilities, but demand was soft and last year the company sold off its technologies. Now Brooks is cooking up a startup called Robust.AI that will develop software for a range of robots.

Although computer scientists have made stunning progress in the past decade with neural networks and other AI techniques, Brooks still insists machines won’t become truly intelligent agents unless they also physically engage with the world. That puts him at odds with technologists who say ultra-powerful AI is imminent, but he has never minded being a contrarian.

“Science works by most people throwing away their careers,” he says. “You don’t know who it’s going to be. You make an intellectual bet, and you have to work on it for a long time, and maybe it pays.”