This robot can sort recycling by giving it a squeeze

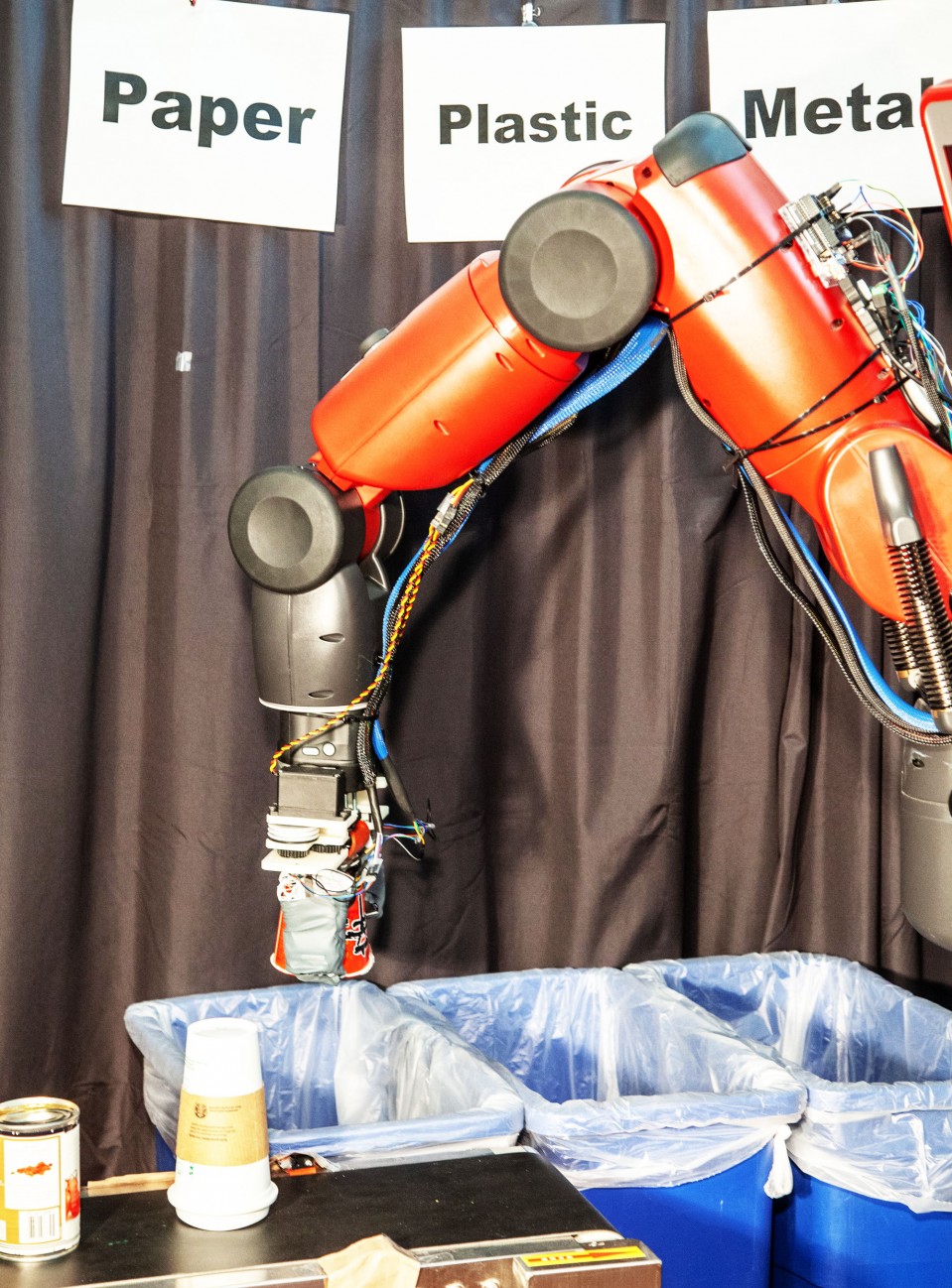

The robot, called RoCycle, uses pincers to pick through garbage and identify what materials each bit contains. It could help reduce how much waste gets sent to landfill.

Greasy pizza box, takeaway coffee cup, plastic yogurt pot—are they trash or recycling? What can and can’t be recycled is often confusing, not least because the answer depends on the facilities at your local waste processing plant. In many plants, grease-soaked cardboard or cups lined with polyethylene cannot be recycled and thus head for landfill—often taking a batch of other recycling with them.

One US waste processing company has reported that 25% of all recycling it receives is so contaminated it must be sent straight to landfills. Meanwhile, the amount of household waste rejected for recycling in England increased by 84% between 2011-2012 and 2014-2015, according to government figures. And it’s about to get worse. Much of the world’s waste is sold to China for recycling. But last month China introduced stricter standards for the amount of contamination it will accept: anything more than 0.5% impure will go in the ground.

That’s why the way we sort waste needs to get much better. Many large recycling centers already use magnets to pull out metals, and air filters to separate paper from heavier plastics. Even so, most sorting is still done by hand. It’s dirty and dangerous work.

So Lillian Chin and her colleagues at the Computer Science and Artificial Intelligence Lab at MIT have developed a robot arm with soft grippers that picks up objects from a conveyor belt and identifies what they are made from by touch.

The robot, called RoCycle, uses capacitive sensors in its two pincers to sense the size and stiffness of the materials it handles. This allows it to distinguish between different metal, plastic, and paper objects. In a mock recycling-plant setup, with objects passing on a conveyor, RoCycle classified a set of 27 objects with 85% accuracy.

Chin thinks that such robots could be used in places like apartment blocks or on university campuses to carry out first-pass sorting of people’s recycling, cutting down on contamination.

Others are developing robots that sort materials by sight. But the team believes that using touch is more accurate. “When you’re sorting through a huge stream of waste, there’s a lot of clutter and things get hidden from view,” says Chin. “You can’t really rely on image alone to tell you what’s going on.”

“The idea is neat—humans receive a lot of feedback from touch,” says Harri Holopainen at ZenRobotics, a company based in Helsinki, Finland, that makes vision-based robotic waste sorters.

The drawback is that picking up items one by one takes time. This makes RoCycle too slow for industrial recycling plants, which are expensive to run and need to process waste quickly to cover costs. Some ZenRobotics robots can sort 4,000 objects an hour, for example. Holopainen thinks RoCycle would need to work around 10 times faster to compete.

The team is working on combining its touch-based robot with a visual system to speed things up. This robot would scan objects passing by and pick up only those it wasn’t sure about.
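For readers who want to see the logic, the "look first, squeeze only when unsure" idea can be sketched in a few lines of Python. This is an illustration of confidence-gated fallback in general, not the MIT team's code: the `HybridSorter` class, the 0.9 threshold, the two stub classifiers, and the example items are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# (material label, confidence between 0 and 1) returned by the vision stage
Prediction = Tuple[str, float]


@dataclass
class HybridSorter:
    """Confidence-gated sorter: trust vision when it is sure, else use touch."""
    classify_by_sight: Callable[[str], Prediction]
    classify_by_touch: Callable[[str], str]
    confidence_threshold: float = 0.9  # hypothetical cut-off, not from the article

    def sort(self, item_id: str) -> Tuple[str, str]:
        """Return (material, method used) for one item on the belt."""
        label, confidence = self.classify_by_sight(item_id)
        if confidence >= self.confidence_threshold:
            # Fast path: the item is never picked up.
            return label, "vision"
        # Slow path: pick the item up and classify it by feel.
        return self.classify_by_touch(item_id), "touch"


# Stand-in classifiers with made-up outputs, for illustration only.
def demo_vision(item_id: str) -> Prediction:
    guesses = {"soda_can": ("metal", 0.97), "crushed_cup": ("plastic", 0.55)}
    return guesses[item_id]


def demo_touch(item_id: str) -> str:
    return {"soda_can": "metal", "crushed_cup": "paper"}[item_id]


sorter = HybridSorter(demo_vision, demo_touch)
for item in ("soda_can", "crushed_cup"):
    print(item, sorter.sort(item))
```

In a setup like this, the speed gain depends on how often the vision stage is confident: only the uncertain items pay the time cost of being picked up.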

RoCycle could also be good at identifying electrical items that have plastic cases, such as video game controllers or electronic toys. A vision-based system would only see the plastic, but RoCycle’s capacitive sensors can detect the hidden treasure underneath. The team got this working in an early prototype, but to work well it requires extra sensors, says Chin.

Once an electrical item has been detected, you need hands to dismantle it. Apple has shown off two robots—Liam in 2016 and Daisy in 2018—that can take apart an iPhone in seconds. “But that’s easy, as they know every detail of their phones,” says Holopainen.

The next generation of recycling robots will need to pull any object to pieces to get at the good bits. “Every object that has been manufactured needs to be dismantled and recycled eventually,” says Holopainen. “In the future, robots will untangle and dismantle.”