Actors are digitally preserving themselves to continue their careers beyond the grave

Improvements in CGI mean neither age nor death need stop some performers from working.

From Carrie Fisher in Rogue One: A Star Wars Story to Paul Walker in the Fast & Furious movies, dead and magically “de-aged” actors are appearing more frequently on movie screens. Sometimes they even appear on stage: next year, an Amy Winehouse hologram will be going on tour to raise money for a charity established in the late singer’s memory. Some actors and movie studios are buckling down and preparing for an inevitable future when using scanning technology to preserve 3-D digital replicas of performers is routine. Just because your star is inconveniently dead doesn’t mean your generation-spanning blockbuster franchise can’t continue to rake in the dough. Get the tech right and you can cash in on superstars and iconic characters forever.

“It’s sort of a safe bet for the people with the money. It’s a familiar face,” says Ingvild Deila, who was scanned by Industrial Light and Magic for her role as Princess Leia in Rogue One. “We like to repeat what’s worked in the past, so it’s part financial, part nostalgia.”

Earlier this year, The Last Jedi visual-effects supervisor Ben Morris told Inverse that the Star Wars franchise is now scanning all its leads, just in case. “We will always digitally scan all the lead actors in the film,” says Morris. “We don’t know if we’re going to need them.”

For celebrities, these scans are a chance to make money for their families post mortem, extend their legacy—and even, in some strange way, preserve their youth.

Digital preservation

Visual-effects company Digital Domain—which has worked on major pictures like Avengers: Infinity War and Ready Player One—has also taken on individual celebrities as clients, though it hasn’t publicized the service. “We haven’t, you know, taken out any ads in newspapers to ‘Save your likeness,’” says Darren Hendler, director of the firm’s Digital Humans Group.

The suite of services that the company offers actors includes a range of scans that capture their famous faces from every conceivable angle, making it simpler to re-create them in the future. Using hundreds of custom LED lights arranged in a sphere, the system can record numerous images in seconds, capturing what the person’s face looks like lit from every angle, right down to the pores.

The lights can also emit different colors, emulating a variety of outdoor conditions where the digital human may be placed. The result is more detailed skin with more accurate color, shading, and reflections. “We capture basically how the subdermal blood flow will change in the face,” says Hendler. “We want to make sure their face is moving and the color changes that happen naturally are taking place.” The technology can also capture how an actor’s wrinkles change with different expressions, as well as how the performer walks and moves. These small details can make digital faces more believable and steer them away from a plastic-like appearance.

Digital Domain has scanned signature hairstyles, wardrobes, and props as well. The total process takes up to two days and generates five to 10 terabytes of data, depending on the extent of detail being recorded. The full selection of services can cost a million dollars.

That sort of bill is why the technology is primarily restricted to movie studios for the time being. But the few individuals who are willing to drop hundreds of thousands of dollars could be investing in the future. Getting scanned at a young age can let you continue to play younger parts, like Samuel L. Jackson in the upcoming Captain Marvel. And you could potentially bring in money for your family by licensing your image to studios after your death.

Digital resurrection

Even with all the advances in CGI, digitally re-created people don’t look perfect yet. When Rogue One brought back Peter Cushing in the role of Grand Moff Tarkin and featured a de-aged Fisher, there were mixed reactions. While it fooled some people, things still looked just a bit off to others. The faces sat in the uncanny valley—the term for the unsettling feeling you get when you look at robots or humanoids that seem almost, but not quite, human.

Beau Janzen, the education lead for visual effects at the Gnomon School of Visual Effects, Games, and Animation, says getting the movement of the body and skin just right on digital people is still a big challenge for visual artists. Minute details, like how the lips part or how cheek skin moves as an actor talks, can give away that you are watching a digitally created face. When the model generated from the live actor doesn’t pick up these details, artists have to make detailed, sometimes frame-by-frame, adjustments.

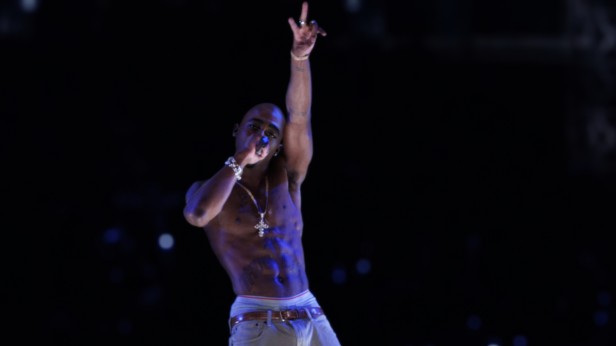

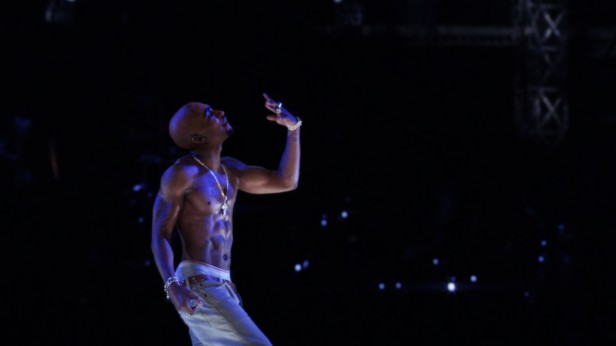

But the special effects continue to improve. As more actors preserve their digital likenesses at a young age, whether on their own initiative or at the request of big-budget studios, re-creating them later could get easier. Hendler estimates that Digital Domain (which is responsible for one of the most famous digital resurrections, the Tupac hologram at Coachella) has scanned 50 to 60 people.

Working from these detailed scans is much more accurate than relying on prosthetic masks or grainy old video footage to re-create a deceased actor’s likeness. For Janzen, though, bringing a person back from the dead is just like any other CGI gig. For example, he frequently swaps the faces of stunt doubles with those of the lead actors and will use CGI elements to replace various aspects of actors’ bodies. “I don’t see it as this big Rubicon to cross, because there is so much going on [in movies] that the audience isn’t aware of anyway,” he says.

So where is this all headed? Will Meryl Streep be taking home an Oscar every year from now until the end of time? Unlikely, but Janzen thinks it’s inevitable that casting deceased actors will become more prevalent. “Anything you can use to make your movie better, your story better, it’s going to get used,” he says.

This story first appeared in our twice-weekly newsletter, Clocking In, which covers how technology is transforming the future of work.