
What to Know Before You Get In a Self-driving Car

Uber thinks its self-driving taxis could change the way millions of people get around. But autonomous vehicles aren’t anywhere near ready for the roads.

Outside a large warehouse in Pittsburgh, in an area along the Allegheny River that was once home to dozens of factories and foundries but now has shops and restaurants, I’m waiting for a different kind of technological revolution to arrive. I check my phone, look up, and notice it’s already here. A white Ford Fusion, its roof bedazzled with futuristic-looking sensors, is idling nearby. Two people sit up front, one monitoring a computer and the other behind the wheel, but the car is in control. I hop in, press a button on a touch screen, and sit back as the self-driving Uber takes me for a ride.

As we zip out onto the road toward downtown, the car stays neatly in its lane, threading deftly between an oncoming car and parked trucks that stick out into the street. I’ve been in a self-driving car before, but it’s still eerie to watch from the back seat as the steering wheel and pedals move themselves in response to events unfolding on the road around us.

To date, most automated vehicles have been tested on highways in places like California, Nevada, and Texas. Pittsburgh, in contrast, features crooked roads, countless bridges, confusing intersections, and more than its fair share of snow, sleet, and rain. As one Uber executive said, if self-driving cars can handle Pittsburgh, then they should work anywhere. As if to test this theory, just as we turn onto a bustling market street, two pedestrians dart onto the road ahead. The car comes to a gentle stop some distance from them, waits, and then continues on its way.

A screen in front of the back seat shows the car’s peculiar view of the world: our surroundings rendered in vivid colors and jagged edges. The picture is the product of an amazing array of instruments arranged all over the vehicle. There are no fewer than seven lasers, including a large spinning lidar unit on the roof; 20 cameras; a high-precision GPS; and a handful of ultrasonic sensors. On the screen, the road looks aqua blue, buildings and other vehicles are red, yellow, and green, and nearby pedestrians are highlighted with what look like little lassos. The screen also indicates how the vehicle is steering and braking, and a button lets you ask the car to end the ride at any time. People on the sidewalk stop and wave while we wait at a traffic light, and a guy driving a pickup behind us keeps giving the thumbs-up. This being 2016, Uber has even made it possible for riders to take a selfie from the back seat. Shortly after my ride is over, I receive by e-mail a looping GIF that shows the car’s view of the world and my face grinning in the top-right corner.

My ride is part of the highest-profile test of self-driving vehicles to date: Uber has begun letting handpicked customers book rides around Pittsburgh in a fleet of automated taxis. The company, which has already upended the taxi industry with a smartphone app that lets you summon a car, aims to make a significant portion of its fleet self-driving within a matter of years. It’s a bold bet that the technology is ready to transform the way millions of people get around. But in some ways, it is a bet that Uber has to make. In the first half of this year it lost a staggering $1.27 billion, mostly because of payments to drivers. Autonomous cars offer “a great opportunity for Uber,” says David Keith, an assistant professor at MIT who studies innovation in the automotive industry, “but there’s also a threat that someone else beats them to market.”

Most carmakers, notably Tesla Motors, Audi, Mercedes-Benz, Volvo, and General Motors, and even a few big tech companies including Google and (reportedly) Apple, are testing self-driving vehicles. Tesla cars drive themselves under many circumstances (although the company warns drivers to use the system only on highways and asks them to pay attention and keep their hands on the steering wheel). But despite its formidable competition, Uber might have the best opportunity to commercialize the technology quickly. Unlike Ford or GM, it can limit automation to the routes it thinks driverless cars can handle at first. And in contrast to Google or Apple, it already has a vast network of taxis that it can make gradually more automated over time.

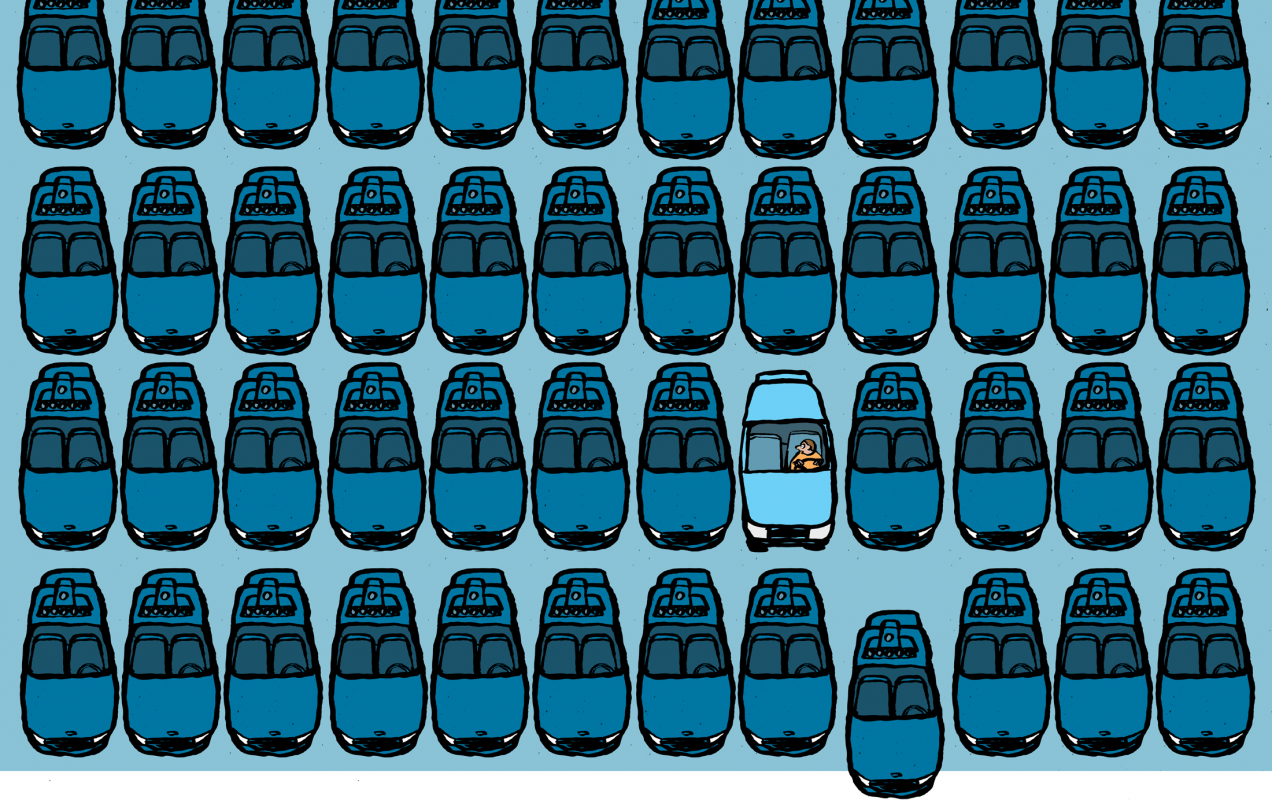

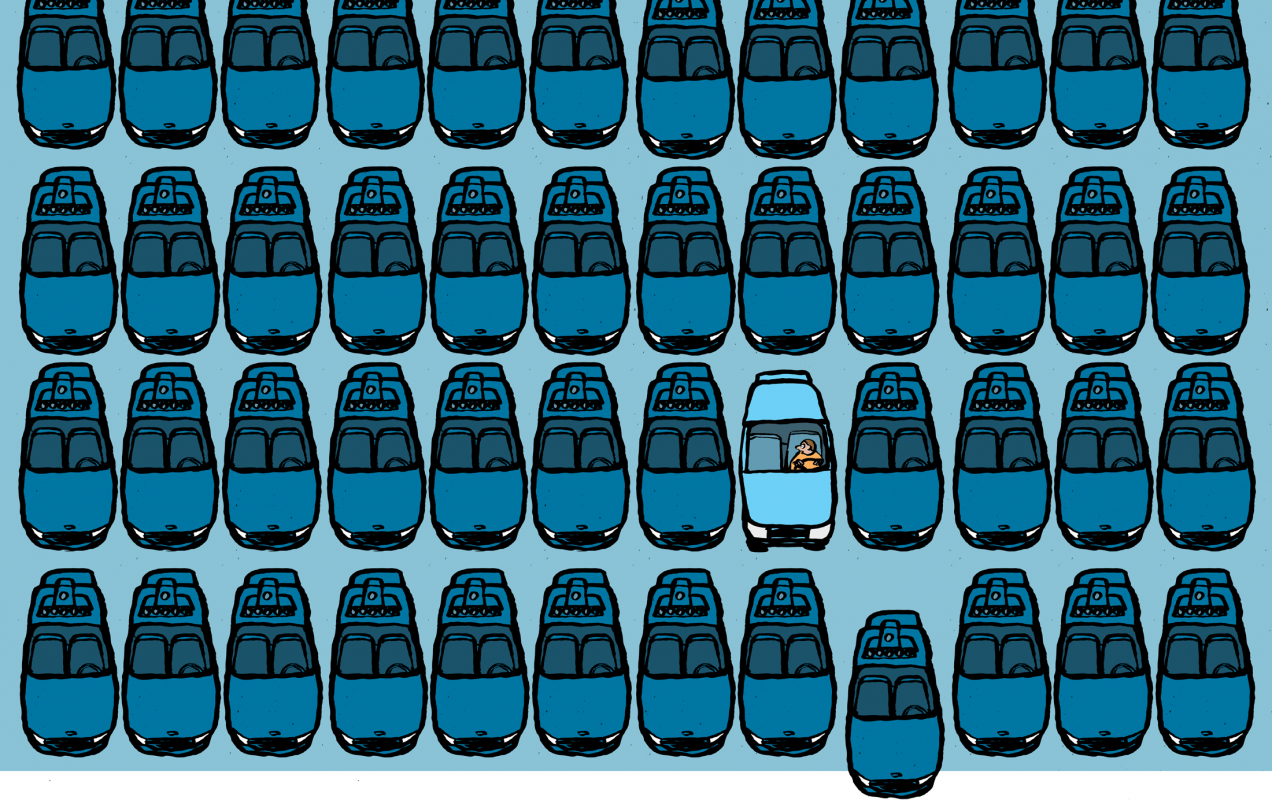

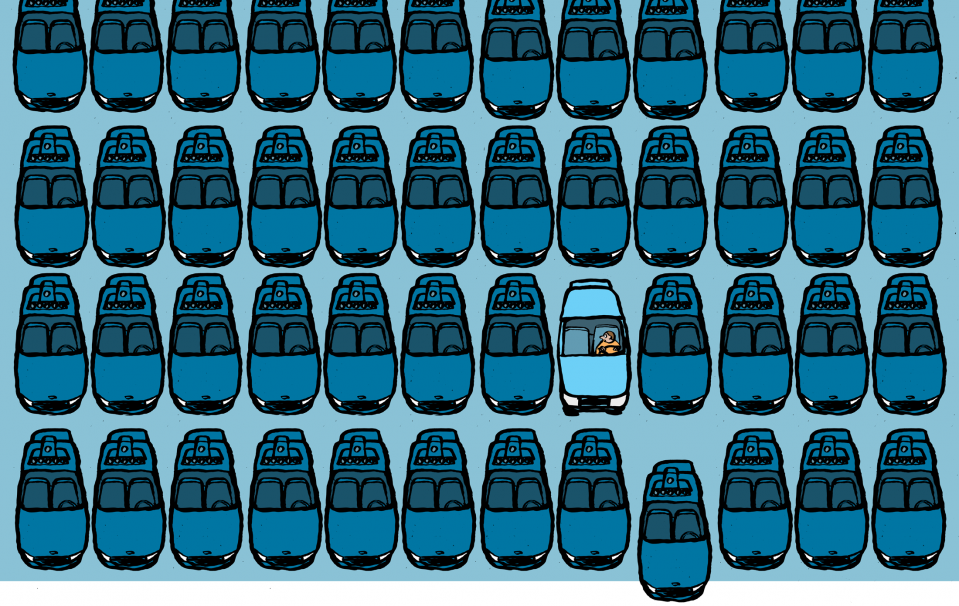

Uber’s executives have little trouble imagining the upside. With no drivers to split revenues with, Uber could turn a profit. Robot taxis could become so cheap and easy to use that it would make little sense for anyone to actually own a car. Taken to its logical conclusion, automated driving could reprogram transportation itself. Uber is already experimenting with food delivery in some cities, and it recently bought Otto, a startup that is developing automated systems for long-haul trucks. Self-driving trucks and vans could ferry goods from fulfillment centers and stores to homes and offices with dizzying speed and efficiency. Shortly before my test ride, Anthony Levandowski, a veteran of Google’s self-driving program who cofounded Otto and now heads Uber’s autonomous operations, said: “I really believe that this is the most important thing computers are going to do in the next 10 years.”

Uber is moving quickly. In February 2015, the company created its Advanced Technology Center, where it’s developing its driverless cars, by hiring a number of researchers from the robotics department at nearby Carnegie Mellon University. Using that expertise, Uber developed its self-driving taxis in a little over a year, roughly the amount of time it takes most automakers to redesign an entertainment console.

But is it moving too quickly? Is the technology ready?

Robo ancestors

For the rest of my time in Pittsburgh, I get around using Ubers controlled exclusively by humans. The contrast is stark. I want to visit CMU’s National Robotics Engineering Center (NREC)—part of its Robotics Institute, one of the pioneering research groups involved in developing self-driving vehicles—to see what its experts think of Uber’s experiment. So I catch a ride with a guy named Brian, who drives a beat-up Hyundai Sonata. Brian says he’s seen several automated Ubers around town, but he can’t imagine a ride in them being as good as one with him. Brian then takes a wrong turn and gets completely lost. To be fair, though, he weaves through traffic just as well as a self-driving car. Also, when the map on his phone leads us to a bridge that’s closed for repairs, he simply asks a couple of road workers for directions and then improvises a new route. He’s friendly, too, offering to waive the fare and buy me a beer to make up for the inconvenience. It makes you realize that automated Ubers will offer a very different experience. Fewer wrong turns and overbearing drivers, yes, but also no one to help put your suitcase in the trunk or return a lost iPhone.

I take a rain check on the beer, say good-bye to Brian, and arrive at NREC’s vast warehouse about 20 minutes late. The building is filled with fascinating robotic prototypes. And if you look carefully, you’ll find some ancestors of today’s automated vehicles. Just inside the entrance, for instance, is Terregator, a six-wheeled robot about the size of a refrigerator, with a ring of sensors on top. In 1984, Terregator was among the first robots designed to roam outside of a lab, rolling around CMU’s campus at a few miles per hour. And Terregator was succeeded, in 1986, by a heavily modified van called NavLab, one of the first fully computer-controlled vehicles on the road. Just outside the front door to NREC sits another notable forerunner: a customized Chevy Tahoe filled with computers and decorated with what looks suspiciously like an early version of the sensor stack on top of one of Uber’s self-driving cars. In 2007 this robot, called Boss, won an urban driving contest organized by the U.S. Defense Advanced Research Projects Agency. It was a big moment for automated vehicles, proving that they could navigate ordinary traffic, and just a few years later Google was testing self-driving cars on real roads.

These three CMU robots show how gradual the progress toward self-driving vehicles was until recently. The hardware and software improved, but the systems still struggled to make sense of the world a driver sees, in all its rich complexity and weirdness. At NREC, I meet William “Red” Whittaker, a CMU professor who led the development of Terregator, the first version of NavLab, and Boss. Whittaker says Uber’s new service doesn’t mean the technology is perfected. “Of course it isn’t solved,” he says. “The kinds of things that aren’t solved are the edge cases.”

And there are plenty of edge cases to contend with, including sensors being blinded or impaired by bad weather, bright sunlight, or obstructions. Then there are the inevitable software and hardware failures. But more important, the edge cases involve dealing with the unknown. You can’t program a car for every imaginable situation, so at some stage, you have to trust that it will cope with just about anything that’s thrown at it, using whatever intelligence it has. And it’s hard to be confident about that, especially when even the smallest misunderstanding, like mistaking a paper bag for a large rock, could lead a car to do something unnecessarily dangerous.

Progress has undoubtedly picked up in recent years. In particular, advances in computer vision and machine learning have made it possible for automated vehicles to do more with video footage. If you feed enough examples into one of these systems, it can do more than spot an obstacle—it can identify it with impressive accuracy as a pedestrian, a cyclist, or an errant goose.

Still, the edge cases matter. The director of NREC is Herman Herman, a roboticist who grew up in Indonesia, studied at CMU, and has developed automated vehicles for defense, mining, and agriculture. He believes self-driving cars will arrive, but he raises a few practical concerns about Uber’s plan. “When your Web browser or your computer crashes, it’s annoying but it’s not a big deal,” he says. “If you have six lanes of highway, there is an autonomous car driving in the middle, and the car decides to make a left turn. Well, you can imagine what happens next. It just takes one erroneous command to the steering wheel.”

Another problem Herman foresees is scaling the technology up. It’s all very well having a few driverless cars on the road, but what about dozens, or hundreds? The laser scanners found on Uber’s cars might interfere with one another, he says, and if those vehicles were connected to the cloud, that would require an insane amount of bandwidth. Even something as simple as dirt on a sensor could pose a problem, he says. “The most serious issue of all—and this is a growing area of research for us—is how you verify, how you test an autonomous system to make sure they’re safe,” says Herman.

Learning to drive

For a more hands-on perspective, I head across town to talk to people actually developing self-driving cars. I visit Raj Rajkumar, a member of CMU’s robotics faculty who runs a lab funded by GM. In the fast-moving world of research into driverless cars, which is often dominated by people in Silicon Valley, Rajkumar might seem a bit old school. Wearing a gray suit, he greets me at his office and then leads me to a basement garage where he’s been working on a prototype Cadillac. The car contains numerous sensors, similar to the ones found on Uber’s cars, but they are all miniaturized and hidden away so that it looks completely normal. Rajkumar is proud of his progress on making driverless cars practical, but he warns me that Uber’s taxis might be raising hopes unreasonably high. “It’s going to take a long time before you can take the driver out of the equation,” he says. “I think people should mute their expectations.”

Besides the reliability of a car’s software, Rajkumar worries that a driverless vehicle could be hacked. “We know about the terror attack in Nice, where the terrorist driver was mowing down hundreds of people. Imagine there’s no driver in the vehicle,” he says. Uber says it takes this issue seriously; it recently added two prominent experts on automotive computer security to its team. Rajkumar also warns that fundamental progress is needed to get computers to interpret the real world more intelligently. “We as humans understand the situation,” he says. “We are cognitive, sentient beings. We comprehend, we reason, and we take action. When you have automated vehicles, they are just programmed to do certain things for certain scenarios.”

In other words, the colorful picture I saw in the back of my automated Uber represents a simplistic and alien way of understanding the world. It shows where objects are, sometimes with centimeter precision, but there’s no understanding of what those things really are or what they might do. This is more important than it might sound. An obvious example is how people react when they see a toy sitting in the road and conclude that a child might not be far away. “The additional trickiness is that Uber makes most of its money in urban and suburban locations,” Rajkumar says. “That’s where unexpected situations tend to arrive more often.”

What’s more, anything that goes wrong with Uber’s experimental taxi service could have ramifications for the entire industry. The first fatal crash involving an automated driving system, when a Tesla in Autopilot mode failed to spot a large truck on a Florida highway this spring, has already raised safety questions. Hastily deploying any technology—even one meant to make the roads safer—might easily trigger a backlash. “While Uber has done a great job of promoting this as a breakthrough, it’s still quite a way away, realistically,” says MIT’s Keith. “Novel technologies depend on positive word of mouth to build consumer acceptance, but the opposite can happen as well. If there are terrible car crashes attributed to this technology, and regulators crack down, then certainly that would moderate people’s enthusiasm.”

I get to experience the technology’s limits firsthand about halfway through my ride in Uber’s car, shortly after I’m invited to sit in the driver’s seat. I push a button to activate the automated driving system, and I’m told I can disengage it at any time by moving the steering wheel, touching a pedal, or hitting another big red button. The car seems to be driving perfectly, just as before, but I can’t help noticing how nervous the engineer next to me now is. And then, as we’re sitting in traffic on a bridge, with cars approaching in the other direction, the car begins slowly turning the steering wheel to the left and edging out into the oncoming lane. “Grab the wheel,” the engineer shouts.

Maybe it’s a bug, or perhaps the car’s sensors are confused by the wide-open spaces on either side of the bridge. Whatever the case, I quickly do as he says.
