Seeing in the Rain

Smart headlights could make difficult driving conditions safer

Source: “Fast Reactive Control for Illumination Through Rain and Snow”

Raoul de Charette et al.

IEEE International Conference on Computational Photography, Seattle, Washington, April 27–29, 2012

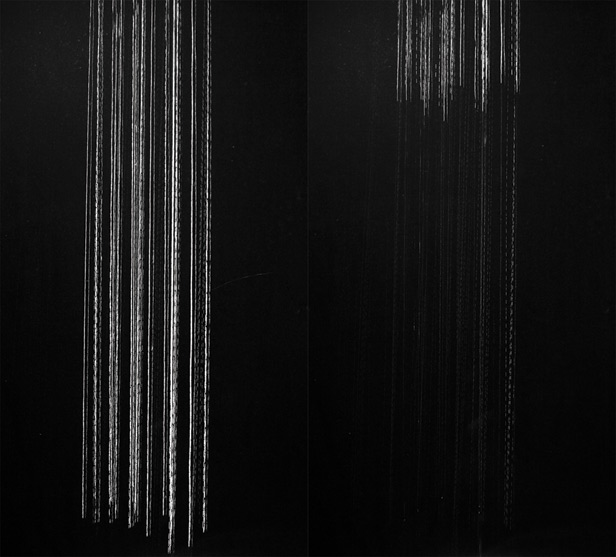

Results: Researchers at Carnegie Mellon University wrote software that allowed a projector to shine light through simulated rain without illuminating the water drops. The system used a camera to predict the downward path of droplets as they entered the top of the projector’s beam. The software then directed the projector to selectively black out parts of its beam to avoid the droplets as they fell. In one test, the system avoided lighting up 84 percent of the droplets.

Why it matters: The experiment suggests that future lighting systems for cars could reduce glare reflecting off falling raindrops or snowflakes. Those reflections often distract drivers and can lead to accidents.

Methods: The researchers paired a high-speed monochrome camera with an off-the-shelf projector. They used a mirror to allow the camera to view the scene in front of the projector from the perspective of the projector’s lens. This arrangement made it easier for the software to predict what the projector needed to do to avoid the water drops, since no correction was needed for perspective and viewpoint. They also wrote tracking algorithms that could speed up detection of the droplets.
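The predict-then-black-out idea can be illustrated with a minimal sketch. It is not the researchers' code: the constant-velocity motion model, the function names `predict_drop` and `beam_mask`, and the `latency` and `radius` parameters are all assumptions made for illustration. Because the camera shares the projector's viewpoint, the sketch treats camera pixels and projector pixels as the same grid.

```python
def predict_drop(x, y, vx, vy, latency):
    """Assumed constant-velocity model: where a drop will be after `latency` seconds."""
    return x + vx * latency, y + vy * latency


def beam_mask(width, height, drops, latency, radius=1):
    """Build a projector mask: 1 = lit pixel, 0 = blacked-out pixel.

    `drops` is a list of (x, y, vx, vy) tuples in pixels and pixels/second,
    as a hypothetical tracker might report them.
    """
    mask = [[1] * width for _ in range(height)]
    for x, y, vx, vy in drops:
        px, py = predict_drop(x, y, vx, vy, latency)
        cx, cy = round(px), round(py)
        # Darken a small square around the predicted position.
        for row in range(cy - radius, cy + radius + 1):
            for col in range(cx - radius, cx + radius + 1):
                if 0 <= row < height and 0 <= col < width:
                    mask[row][col] = 0
    return mask


# One drop entering at the top of the beam, falling straight down.
mask = beam_mask(8, 8, [(3.0, 0.0, 0.0, 100.0)], latency=0.03, radius=0)
```

In this toy setup the only dark pixel is the one where the drop is expected to be by the time the projector responds; a real system would need a far shorter latency and a better motion model.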

Next Steps: For such a system to be practical, headlights would need to be based on LEDs, as they already are in some cars today. Arrays of LEDs would allow fine control of a headlight’s beam, mimicking the effect of a projector. The system also needs to be faster to be able to handle raindrops approaching a car as it travels at high speeds. The researchers anticipate that cameras, LED light sources, and a processor could eventually be built on the same chip, allowing such a system to sense and react to falling drops extremely quickly.

Sound-Based Gesture Control

Any computer can be controlled with the wave of a hand

Source: “SoundWave: Using the Doppler Effect to Sense Gestures”

Desney Tan et al.

ACM SIGCHI Conference on Human Factors in Computing Systems, Austin, Texas, May 5–10, 2012

Results: Software developed by researchers at Microsoft and the University of Washington uses a computer’s speakers and microphone to sense arm gestures and other movements of a person’s body. The speakers emit a high-pitched tone between 18 and 22 kilohertz, inaudible to most people, and the microphone picks up sound reflections so the software can analyze them. The researchers showed that a hand wave can be used to scroll through a document or flip through a photo album. The computer can also sense when a user walks away and switch into a low-power state.

Why it matters: Devices that use cameras or accelerometers to sense movements, such as Microsoft’s Kinect and Nintendo’s Wii, demonstrate that gestures can be a useful, powerful, and fun way to control a computer. Gesture control could become more widespread if it could be used without a special camera or hand-held sensor.

Methods: The researchers programmed the software to analyze reflected sound for several clues that help it recognize a person’s gestures. It uses the Doppler effect, the shift in the frequency of a reflected tone caused by motion, to determine the direction of movement. The researchers also designed a set of hand gestures that could be readily distinguished using those signals, and the software was shown to work reliably on several desktops and laptops with different sound hardware.
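A rough sense of the frequency shifts involved comes from the textbook Doppler formula for a tone reflected off a moving object. The sketch below is not taken from SoundWave: it assumes a stationary speaker and microphone in the same place, a speed of sound of 343 meters per second, and hypothetical function names and tolerance values.

```python
SPEED_OF_SOUND = 343.0  # meters per second in air at roughly 20 degrees C (assumed)


def reflected_frequency(tone_hz, hand_speed, c=SPEED_OF_SOUND):
    """Frequency heard after reflection off a hand moving at `hand_speed` m/s.

    Positive speed means the hand moves toward the device.
    """
    return tone_hz * (c + hand_speed) / (c - hand_speed)


def movement_direction(tone_hz, observed_hz, tolerance_hz=1.0):
    """Classify motion from the sign of the shift (the tolerance is an assumed value)."""
    shift = observed_hz - tone_hz
    if shift > tolerance_hz:
        return "toward"
    if shift < -tolerance_hz:
        return "away"
    return "still"


tone = 20_000.0  # inside the 18 to 22 kilohertz range described above
toward = reflected_frequency(tone, 0.5)   # hand approaching at 0.5 m/s
away = reflected_frequency(tone, -0.5)    # hand receding at 0.5 m/s
print(movement_direction(tone, toward), movement_direction(tone, away))
```

Under these assumptions, a hand moving at half a meter per second shifts a 20-kilohertz tone by roughly 58 hertz, upward when approaching and downward when receding.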

Next Steps: The researchers plan to develop more sophisticated mathematical techniques to allow recognition of a wider range of gestures. They also hope to make a version of the software for mobile devices; preliminary tests show that the same approach should work. The accuracy of detection could potentially be improved by making use of the multiple speakers and microphones on some devices to emit and detect more complex tones and reflections.