Business Impact

The Computing Trend that Will Change Everything

Computing isn’t just getting cheaper. It’s becoming more energy efficient. That means a world populated by ubiquitous sensors and streams of nanodata.

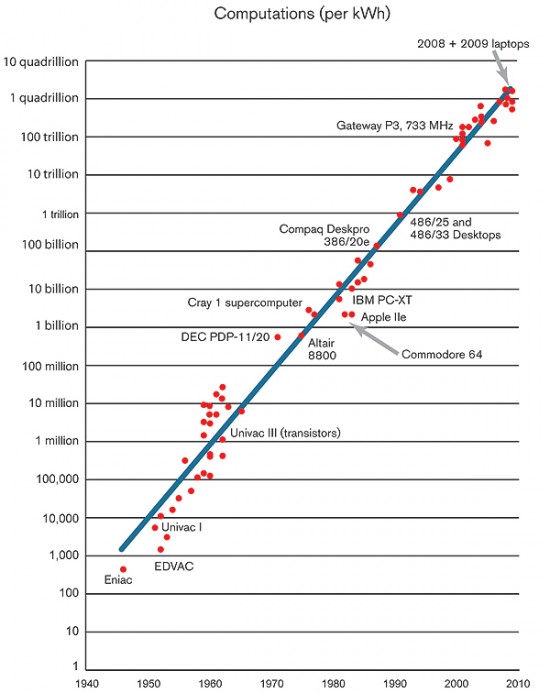

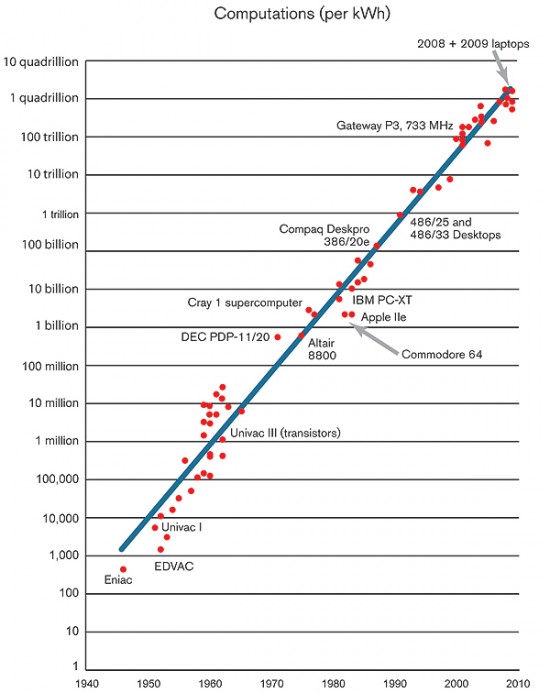

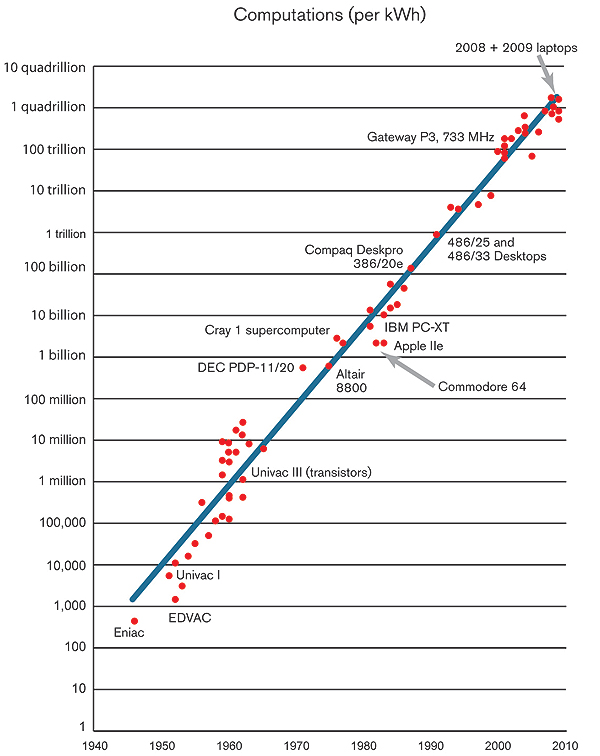

The performance of computers has shown remarkable and steady growth, doubling every year and a half since the 1970s. What most folks don’t know, however, is that the electrical efficiency of computing (the number of computations that can be completed per kilowatt-hour of electricity used) has also doubled every year and a half since the dawn of the computer age.

Laptops and mobile phones owe their existence to this trend, which has led to rapid reductions in the power consumed by battery-powered computing devices. The most important future effect is that the power needed to perform a task requiring a fixed number of computations will continue to fall by half every 1.5 years (or a factor of 100 every decade). As a result, even smaller and less power-intensive computing devices will proliferate, paving the way for new mobile computing and communications applications that vastly increase our ability to collect and use data in real time.
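The "factor of 100 every decade" follows from compounding the 1.5-year halving. A minimal sketch of that arithmetic, assuming the historical doubling period simply holds constant:

```python
# Sketch of the "halving every 1.5 years" arithmetic described in the text.
DOUBLING_PERIOD_YEARS = 1.5  # historical doubling time of computations per kWh


def improvement_factor(years: float, doubling_period: float = DOUBLING_PERIOD_YEARS) -> float:
    """How many times more computations per kWh after `years` of steady doubling."""
    return 2 ** (years / doubling_period)


def energy_fraction(years: float, doubling_period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Fraction of today's energy that a fixed-size task would need after `years`."""
    return 1 / improvement_factor(years, doubling_period)


print(f"Gain over one decade: ~{improvement_factor(10):.0f}x")
print(f"Energy for a fixed task after a decade: {energy_fraction(10):.2%} of today's")
```

Ten years is about 6.67 halvings, and 2 raised to that power is about 102, which the text rounds to a factor of 100.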

As one of many examples of what is becoming possible using ultra-low-power computing, consider the wireless no-battery sensors created by Joshua R. Smith of the University of Washington. These sensors harvest energy from stray television and radio signals and transmit data from a weather station to an indoor display every five seconds. They use so little power (50 microwatts, on average) that they don’t need any other power source.
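To get a sense of how small 50 microwatts is, the quoted figures can be converted into an energy budget per reading and per day. This sketch assumes, for illustration, that the 50-microwatt average covers the sensor's entire cycle and that it reports exactly once every five seconds:

```python
# Energy budget implied by the figures quoted for the battery-free sensor.
AVERAGE_POWER_W = 50e-6    # 50 microwatts, average (from the text)
REPORT_INTERVAL_S = 5.0    # one reading every five seconds (from the text)
SECONDS_PER_DAY = 24 * 60 * 60

# Energy = power x time, so each reporting cycle costs power x interval.
energy_per_report_j = AVERAGE_POWER_W * REPORT_INTERVAL_S
reports_per_day = SECONDS_PER_DAY / REPORT_INTERVAL_S
energy_per_day_j = AVERAGE_POWER_W * SECONDS_PER_DAY

print(f"Energy per report: {energy_per_report_j * 1e6:.0f} microjoules")
print(f"Reports per day: {reports_per_day:,.0f}")
print(f"Energy per day: {energy_per_day_j:.2f} J")
```

Under those assumptions each reading costs about 250 microjoules, and a full day of readings about 4.3 joules.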

Harvesting background energy flows, including ambient light, motion, or heat, opens up the possibility of mobile sensors operating indefinitely with no external power source, and that means an explosion of available data. Mobile sensors expand the promise of what Erik Brynjolfsson, a professor of management at MIT, calls "nanodata," or customized fine-grained data describing in detail the characteristics of individuals, transactions, and information flows.

How long can this trend continue? In 1985, the physicist Richard Feynman calculated that the energy efficiency of computers could improve over then-current levels by a factor of at least a hundred billion (10¹¹), and our data indicate that the efficiency of computing devices progressed by only about a factor of 40,000 from 1985 to 2009. In other words, we've hardly begun to tap the full potential.
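The numbers in this paragraph can be checked against the 1.5-year doubling time cited earlier. The sketch below uses only figures from the text: Feynman's lower bound of 10¹¹ and the observed factor of 40,000 between 1985 and 2009. Because Feynman's figure is a minimum, the remaining headroom it computes is a lower bound as well.

```python
import math

# Figures quoted in the text.
FEYNMAN_HEADROOM_1985 = 1e11      # lower bound on possible improvement over 1985 levels
OBSERVED_GAIN_1985_2009 = 40_000  # observed efficiency gain, 1985 to 2009
YEARS_OBSERVED = 2009 - 1985


def implied_doubling_time(gain: float, years: float) -> float:
    """Years per doubling implied by a total `gain` achieved over `years`."""
    return years / math.log2(gain)


# Headroom still available after 2009 (at least this much, since Feynman's figure is a floor).
remaining = FEYNMAN_HEADROOM_1985 / OBSERVED_GAIN_1985_2009
t_double = implied_doubling_time(OBSERVED_GAIN_1985_2009, YEARS_OBSERVED)

print(f"Remaining headroom after 2009: at least {remaining:,.0f}x")
print(f"Implied doubling time, 1985 to 2009: {t_double:.2f} years")
```

A 40,000-fold gain over 24 years is roughly 15.3 doublings, or one about every 1.57 years, close to the 1.5-year figure; dividing 10¹¹ by 40,000 leaves at least a 2.5-million-fold improvement still available.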

To put the matter concretely, if a modern-day MacBook Air operated at the energy efficiency of computers from 1991, its fully charged battery would last all of 2.5 seconds. Similarly, the world’s fastest supercomputer, Japan’s 10.5-petaflop Fujitsu K, currently draws an impressive 12.7 megawatts. That is enough to power a middle-sized town. But in theory, a machine equaling the K’s calculating prowess would, inside of two decades, consume only as much electricity as a toaster oven. Today’s laptops, in turn, will be matched by devices drawing only infinitesimal power.
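The toaster-oven comparison follows from the same doubling rule. A minimal sketch, assuming the 1.5-year doubling time continues for another 20 years (an extrapolation, not a guarantee):

```python
# Hypothetical projection: power for the same computation rate falls by 2 ** (years / 1.5).
K_POWER_WATTS = 12.7e6       # Fujitsu K power draw quoted in the text
DOUBLING_PERIOD_YEARS = 1.5  # assumed to hold for the whole projection


def projected_power(watts_today: float, years: float,
                    doubling_period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Power needed to match today's computation rate after `years` of steady gains."""
    return watts_today / 2 ** (years / doubling_period)


watts_in_20_years = projected_power(K_POWER_WATTS, 20)
print(f"K-equivalent machine in 20 years: ~{watts_in_20_years / 1000:.1f} kW")
```

Twenty years is about 13.3 doublings, a factor of roughly 10,000, which brings 12.7 megawatts down to about 1.2 kilowatts.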

The phenomenon identified here drives the energy efficiency of all silicon-based devices, but no one has yet determined whether the efficiency of data transmission—the energy cost to sensors of sending out wireless signals, for instance—progresses at comparable rates. Design choices about the speed of information transmittal, the frequency of communication, and the ways in which these devices reduce their power when not performing tasks all have a significant effect on the overall electricity use of mobile devices. But the effect of efficiency improvements in computing is to drive innovations in these other areas, because that’s the only way to capture the full benefit of new computing and sensing technologies.
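The point that communication frequency and idle power shape a device's overall electricity use can be made concrete with a simple duty-cycle model: average power is the time-weighted mix of active and sleep power. All the power and timing figures below are hypothetical, chosen only for illustration, and are not measurements of any real sensor.

```python
def average_power(active_w: float, sleep_w: float, active_s: float, period_s: float) -> float:
    """Time-weighted average power for a device that wakes for `active_s` every `period_s`."""
    if not 0 <= active_s <= period_s:
        raise ValueError("active time must lie within the reporting period")
    duty = active_s / period_s  # fraction of time spent awake
    return active_w * duty + sleep_w * (1 - duty)


# Hypothetical figures for illustration only.
ACTIVE_W = 10e-3   # 10 mW while sensing, computing, and transmitting
SLEEP_W = 1e-6     # 1 uW while idle
ACTIVE_S = 5e-3    # awake for 5 ms per report

# Reporting less often lowers the average, until sleep power dominates.
for period in (1.0, 5.0, 60.0):
    p = average_power(ACTIVE_W, SLEEP_W, ACTIVE_S, period)
    print(f"report every {period:>4.0f} s -> {p * 1e6:6.2f} uW average")
```

With these made-up numbers, stretching the reporting interval from 1 second to 60 seconds cuts average power by more than an order of magnitude, and at long intervals the idle draw becomes the largest share, which is why both design choices matter.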

The long-term increase in the energy efficiency of computing (and the technologies it makes possible) will revolutionize how we collect and analyze data and how we use data to make better decisions. It will help the “Internet of things” become a reality—a development with profound implications for how businesses, and society generally, will develop in the decades ahead. It will enable us to control industrial processes with more precision, to assess the results of our actions quickly and effectively, and to rapidly reinvent our institutions and business models to reflect new realities. It will also help us move toward a more experimental approach to interacting with the world: we will be able to test our assumptions with real data in real time, and modify those assumptions as reality dictates.

Historically, the best computer scientists and chip designers focused on the cutting-edge problems of high-performance computing, and no doubt many will still be tempted to address those issues. But continuous progress in the energy efficiency of computing is now drawing top designers and engineers to tackle a new kind of problem—one defined by whole-system integrated design, elegant frugality in the use of electricity and the transmittal of data, and the real possibility of transforming humanity’s relationship to the universe. I, for one, am delighted to see them take up that challenge.

Jonathan Koomey is an author, an entrepreneur, and a consulting professor at Stanford University. He is the author of Cold Cash, Cool Climate: Science-Based Advice for Ecological Entrepreneurs.

If the energy efficiency of computing continues its historical rate of change, it will increase by a factor of 100 over the next decade, with consequent improvements in mobile computing, sensors, and controls. What new applications and products could become possible with such a large efficiency improvement 10 years hence? What other innovations would be necessary in order for such technologies to be used more effectively?