Neural networks have been used to turn the brain activity of a person hearing words into intelligible, recognizable speech. The work could be a step toward technology that can one day decode people’s thoughts.

A challenge: Thanks to fMRI scanning, we’ve known for decades that speaking, or hearing others speak, activates specific parts of the brain. However, it has proved hugely challenging to translate thoughts into words. A team from Columbia University has developed a system that combines deep learning with a speech synthesizer to turn brain activity into speech.

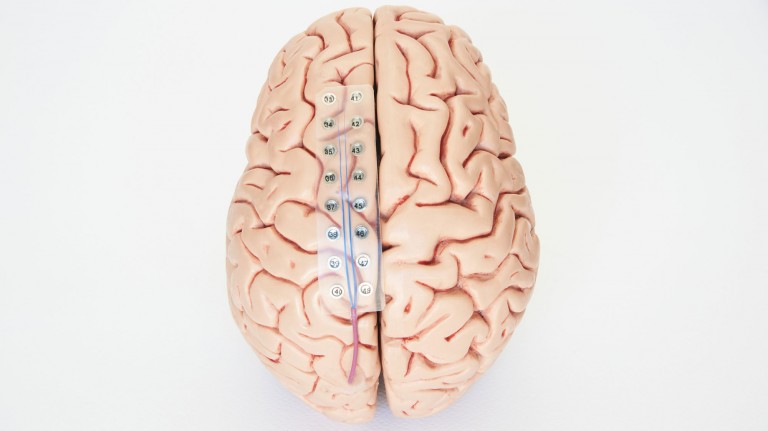

The study: The team temporarily placed electrodes in the brains of five people scheduled to have brain surgery for epilepsy. (People who have this procedure often have implants fitted to learn more about their seizures.) The volunteers were asked to listen to recordings of sentences, and their brain activity was used to train deep-learning-based speech recognition software. Then they listened to 40 numbers being spoken. The AI tried to decode what they had heard on the basis of their brain activity, and then spoke the results out loud in a robotic voice. According to volunteers who listened to it, what the voice synthesizer produced was understandable as the right word 75% of the time. The results were published in Scientific Reports today, and recordings of the synthesized speech are available to listen to online.

The aim? At the moment the technology can only reproduce words that these five patients have heard, and it wouldn't work on anyone else. But the hope is that technology like this could one day let people who have been paralyzed communicate with their family and friends, despite losing the ability to speak.
